Accelerating Systems Trade Studies and lowering human bias using AI and ML

An intelligent research tool that streamlines the engineering design process by automating trade studies and reducing biased decision-making.

Duration: Oct 2022 - Present | Role: Developer, Designer | Current Status: Work in Progress

The Problem

Trade studies are valuable for any project, but the process itself can be repetitive. That repetition often saps the motivation to find the best available option when performing trade-off studies. In short, when trade studies take too long, design suffers.

We often associate feelings with whatever component we’re looking for, and that can introduce bias into our decisions.

Unless there’s a centralized database for that specific component (for example, McMaster for hardware tools), there’s always a chance that an unexplored and potentially better option exists. A tool that could mine the entire web for a given component and make sense of what the relevant parameters mean would therefore be very useful. A tool with such capabilities could transform how engineers approach trade studies compared with traditional methods.

Data Collection

I was doing an industry-sponsored project with the Mechanical Engineering department and noticed a pattern in most of the trade studies the team was performing. I decided to reach out to engineers in other departments to understand how they approached trade studies.

In Mechanical Engineering, the majority of trade studies involve finding a component that matches the criteria set by the project requirements, which led me to conclude that most ME trade studies follow the same research process. The process is as follows:

Trade studies in other departments:

After conversing with colleagues in the robotics department, I realized that the majority of their research time was devoted to finding out how someone else had achieved the task they were trying to achieve. Most of the teams spent their time looking for the perfect API to solve a problem. It could be a text-to-speech API that worked solely on the client side, a geo-tracking API that would work in Python, and so on.

This trade-off study problem was, in the majority of cases, a three-part problem.

There’s the main ‘component’ they’re looking for, then there are the parameters that they weigh against each other, and finally there’s the comparison and filtration. Streamlining this process would not only accelerate search and filtration but also offer an opportunity to reduce bias in the engineering design process.

Bias: the lesser known culprit in engineering

When doing trade-off studies we often fall victim to unconscious biases. A relevant example came up when I interviewed one of the Propulsion teams about a trade study in which they were comparing various turbines. They were tempted to choose a turbine manufactured by their sponsor (let’s call it Banana Engines; not listed due to NDA), even though their requirements document never said they must choose a turbine manufactured by their sponsor. They were allowed to choose virtually any turbine as long as it met their parameters. As a result, they ended up researching only turbines made by Banana Engines. Almost all their Google searches involved turbines that were somehow related to Banana Engines. This meant they missed out on all the other turbines that could have met their parameters. Blancus, the tool described here, is designed to prevent such a bias.

Visual appeal

Another common example of bias in decision making is leaning towards the more visually appealing option. This is usually a factor in software capstones. For instance, one of the capstone projects was to design a language learning app. The team wanted to research the best orientation for the items in their uncollapsed hamburger menu. After looking at their trade study, I realized that all their parameters were focused on making it easy for the user to navigate the menu. However, upon interviewing a couple of the team members, I discovered that even though ‘visual appeal’ wasn’t a factor in their rubric, they still ended up choosing the option that ‘looked the best’.

Solution

An accelerated approach to trade studies would involve reducing bias and streamlining comparisons.

My prototype’s algorithm allows the user to find as much relevant information as possible in a fraction of the time. I leveraged a variety of APIs and text summarization tools (including GPT-3) to get an accelerated comparison. The algorithm produces relevant information for all the parameters that are necessary to make a good trade study decision.

The main home screen for the prototype is displayed below.

When the user enters the component they want to research or compare, the program first tries to make sense of what that component means in the real world. Unlike Google, which searches the web for the relevant keywords, Blancus uses GPT-3 (from OpenAI) and CommonCrawl datasets to understand the product and then come up with parameters relevant to it. During this step, the program is also fine-tuned for whichever component the user is looking for, which prepares it to make sense of the inputs the user provides next. Once the program has a sense of what the user wants to research and what the relevant parameters look like, it asks the user to select the parameters they are concerned about. Using a similar approach to the previous step, the program prepares a few options in the back end related to the parameters the user chose. For example, let’s say we’re looking for RC motors for a hobby airplane. The program would look something like this:

The user won’t be shown any of the motors yet, in order to reduce bias. Once the user is done selecting the parameters, they’re asked to enter thresholds for them, for instance: Brushless, 20kV, $200. The algorithm then runs again and sorts the motors that meet those thresholds or come close to them. The results would look something like this:

How this reduces bias

The algorithm won’t show results to the user until the user is done entering their threshold parameters, and it keeps processing until the list of motors meets the listed parameters or comes close to them. In a Google search, the researcher is immediately shown results that may or may not meet their requirements and then has to filter manually down to the component that meets their criteria. Blancus instead holds the results in the back end until they’re finalized. Holding results back encourages users to focus the search solely on the parameters they established at the beginning, rather than on unconscious biases that may arise during a traditional search.
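The idea of holding results back until the thresholds are locked in can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Blancus implementation: the `BlindSearch` class, the `closeness` helper, the field names and the motor entries are all hypothetical, and every numeric threshold is treated as a target value that candidates are ranked against by relative gap.

```python
def closeness(candidate, targets):
    """Sum of relative gaps to the numeric targets (0.0 means an exact match).

    Assumes every target value is non-zero.
    """
    return sum(abs(candidate[key] - target) / abs(target)
               for key, target in targets.items())


class BlindSearch:
    """Keeps candidates hidden until the user has finalized thresholds."""

    def __init__(self, candidates):
        self._candidates = list(candidates)
        self._thresholds = None  # nothing can be shown while this is None

    def finalize_thresholds(self, required, targets):
        # required: exact-match fields, e.g. {"type": "Brushless"}
        # targets: numeric fields to get close to, e.g. {"price": 200}
        self._thresholds = (dict(required), dict(targets))

    def results(self):
        if self._thresholds is None:
            raise RuntimeError("Finalize thresholds before viewing results")
        required, targets = self._thresholds
        matching = [c for c in self._candidates
                    if all(c[key] == value for key, value in required.items())]
        # Closest candidates first; near misses stay in the list.
        return sorted(matching, key=lambda c: closeness(c, targets))


# Made-up motors, for illustration only.
motors = [
    {"name": "D", "type": "Brushless", "rating": 30, "price": 210},
    {"name": "C", "type": "Brushed", "rating": 20, "price": 150},
    {"name": "B", "type": "Brushless", "rating": 22, "price": 180},
    {"name": "A", "type": "Brushless", "rating": 20, "price": 200},
]

search = BlindSearch(motors)
search.finalize_thresholds({"type": "Brushless"}, {"rating": 20, "price": 200})
ranked_names = [m["name"] for m in search.results()]
```

Calling `results()` before `finalize_thresholds()` raises an error, which mirrors the rule that the user sees nothing until their criteria are fixed.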

Weightage and Relative Importance: Next Challenge

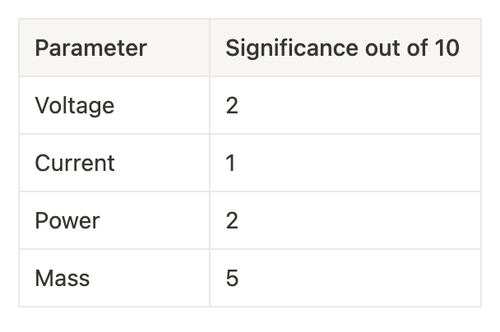

An integral part of the trade study process is assigning relative weightage to different parameters. For example, if engineers are searching for a battery for a quadcopter and their voltage requirements are less important than their mass requirements, they can ‘trade off’ some points from the voltage parameter to the mass parameter.

Two of the most commonly used methods for normalizing these weightages are the Analytical Hierarchy Process and the Linear Normalization Process. With the linear weighting method, the importance factors are in a range of 1-9, and the normalized weights of all the parameters must add up to 1.
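As a rough illustration of linear normalization, the sketch below divides each importance factor by the total so that the weights sum to 1, and then combines the weights with already-scaled parameter values into a single score. The function names and the battery numbers are hypothetical and are not taken from Blancus.

```python
def normalize_weights(importance):
    """Turn raw importance factors into weights that add up to 1."""
    total = sum(importance.values())
    if total <= 0:
        raise ValueError("Importance factors must sum to a positive number")
    return {name: value / total for name, value in importance.items()}


def weighted_score(scaled_values, weights):
    """Weighted sum of parameter values that share a common scale."""
    return sum(weights[name] * scaled_values[name] for name in weights)


# Hypothetical quadcopter battery: mass matters more than voltage or cost.
importance = {"mass": 6, "voltage": 3, "cost": 1}
weights = normalize_weights(importance)

# Hypothetical candidate already scaled so that higher is better.
candidate = {"mass": 5, "voltage": 3, "cost": 1}
score = weighted_score(candidate, weights)
```

Moving points from one importance factor to another changes the weights but never their total, which is what makes the ‘trade off’ explicit.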

The Analytical Hierarchy Process (AHP) is a widely-used tool in the engineering design world, allowing parameters to be compared and evaluated relative to each other. A basic AHP process would look something like this:

I decided to use AHP normalization since engineers are more used to it; however, in future iterations I can tailor the program to account for other kinds of normalization.

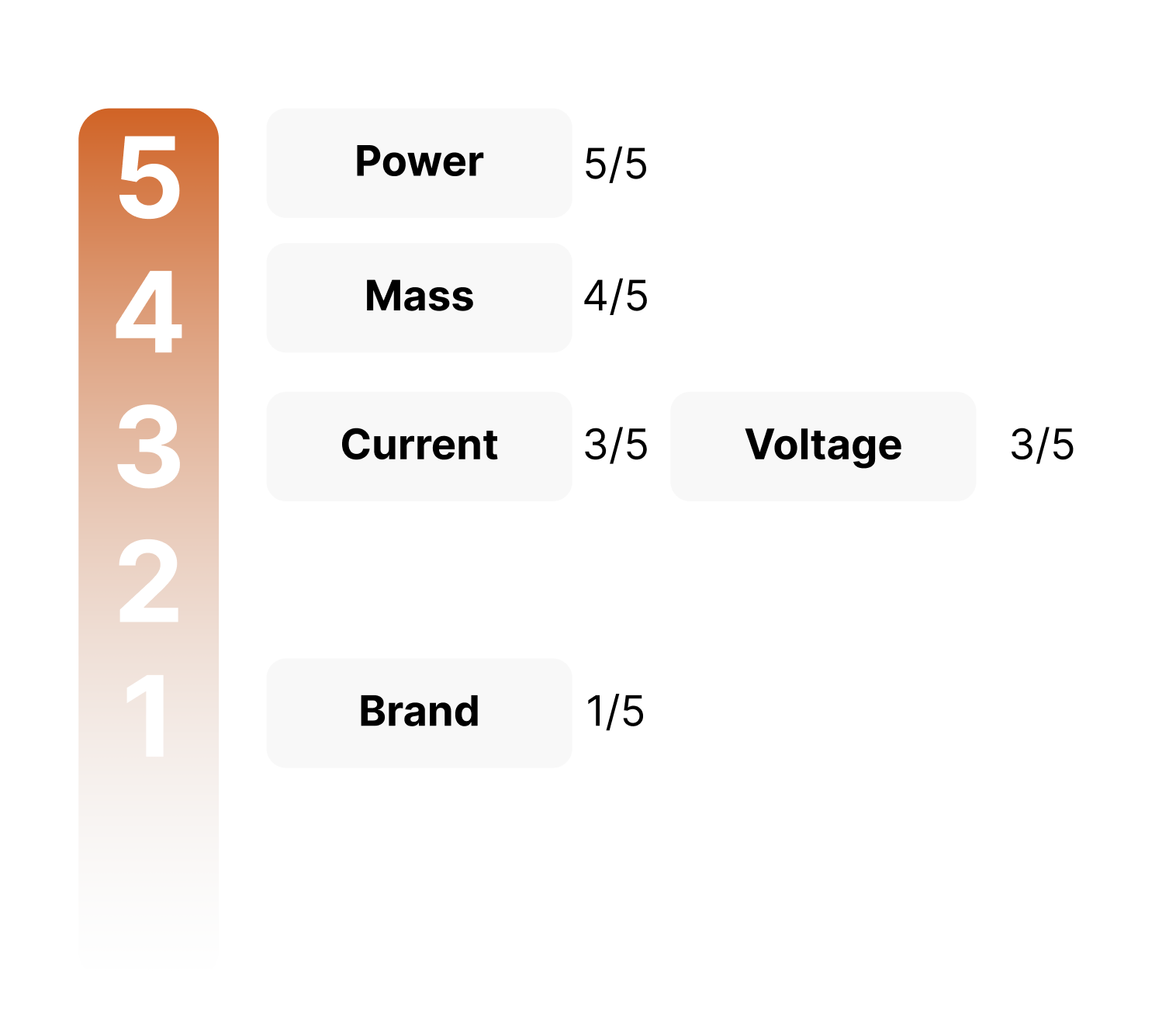

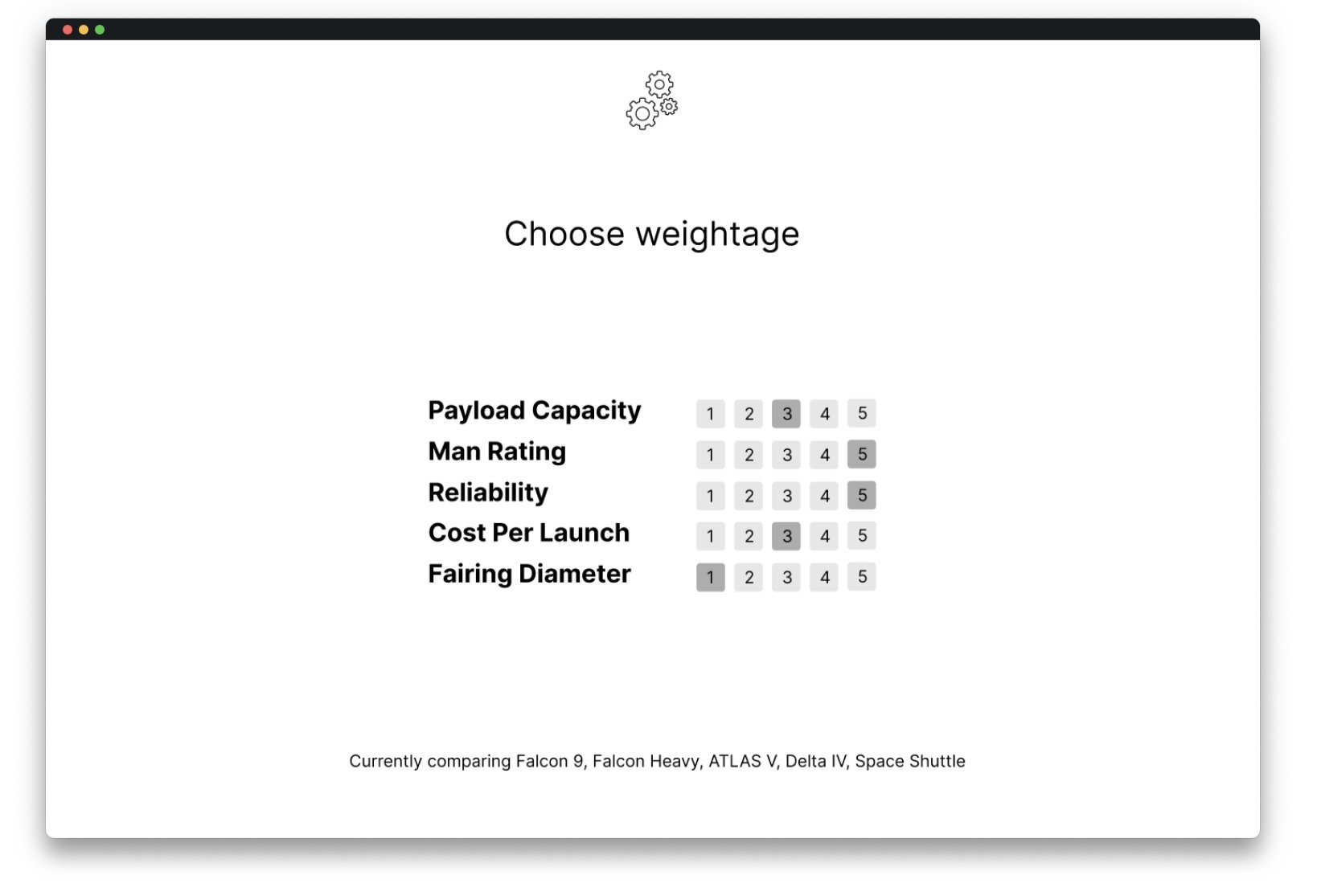

In AHP, the importance of each parameter is ranked out of 5 or 10. This allows engineers to ask themselves questions such as ‘Is Power more important to me than Mass?’ and rate the answer on a scale of 1-5, as seen in the figure above.

There are a few important points to consider when using AHP. Two parameters can have equal weighting, and for effective trade studies the top priority is usually reserved for one or two parameters. It is also acceptable to leave some importance levels unassigned (for example, in the example above, 2/5 is not assigned to any parameter).
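For readers who want to see the arithmetic, here is a minimal sketch of the textbook pairwise form of AHP, which may differ from the simplified rating approach used in the prototype. Answers to questions like ‘Is Power more important than Mass?’ are collected in a reciprocal matrix, and the weights are approximated by normalizing each column and averaging across each row. The function name `ahp_weights` and the comparison values are assumptions made for illustration.

```python
def ahp_weights(pairwise):
    """Approximate AHP priority weights from a pairwise comparison matrix.

    pairwise[i][j] says how much more important parameter i is than
    parameter j, and pairwise[j][i] is expected to be its reciprocal.
    """
    n = len(pairwise)
    column_sums = [sum(pairwise[row][col] for row in range(n))
                   for col in range(n)]
    normalized = [[pairwise[row][col] / column_sums[col] for col in range(n)]
                  for row in range(n)]
    # The average of each normalized row is that parameter's weight.
    return [sum(row) / n for row in normalized]


# Hypothetical judgments: Power is 2x as important as Mass and 4x as
# important as Cost; Mass is 2x as important as Cost.
parameters = ["Power", "Mass", "Cost"]
comparisons = [
    [1.0, 2.0, 4.0],
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]
ahp_result = dict(zip(parameters, ahp_weights(comparisons)))
# Roughly Power 0.571, Mass 0.286, Cost 0.143
```

The row-average method is a common approximation of the principal eigenvector; for a perfectly consistent matrix like this one the two agree.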

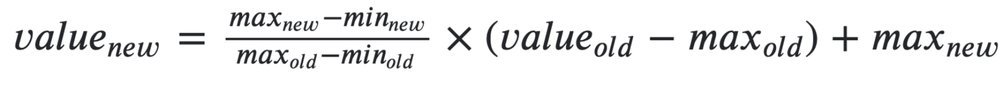

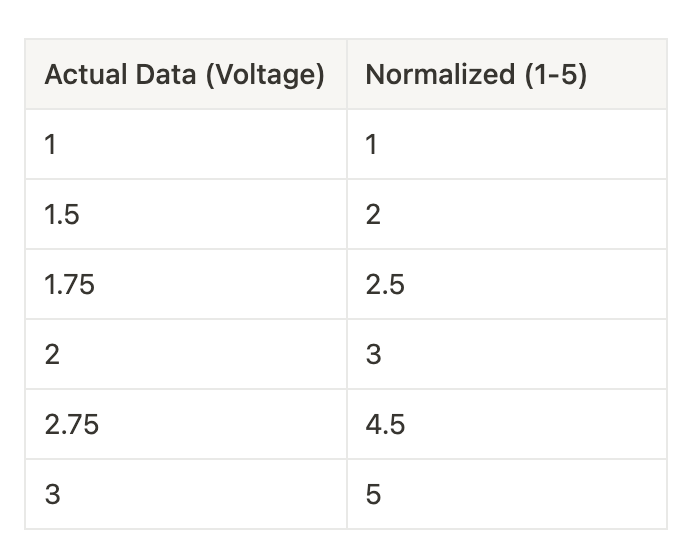

How Blancus Scales and Normalizes Parameters

Blancus uses the standard scaling formula to scale all the parameters between 1 and 5. If the dataset for Voltage looks like Column 1, the scaled version would look like Column 2.
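Assuming the ‘standard scaling formula’ refers to min-max scaling, the mapping onto the 1-5 range could look like the sketch below. The function name `scale_to_range` and the voltage values are made up for illustration and are not taken from the prototype.

```python
def scale_to_range(values, low=1.0, high=5.0):
    """Min-max scale a list of numbers onto the interval [low, high]."""
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # All values are identical, so place them at the middle of the range.
        return [(low + high) / 2 for _ in values]
    span = v_max - v_min
    return [low + (v - v_min) * (high - low) / span for v in values]


# Hypothetical voltage column (in volts) and its scaled counterpart.
voltages = [3.0, 6.0, 9.0, 12.0, 15.0]
scaled_voltages = scale_to_range(voltages)  # [1.0, 2.0, 3.0, 4.0, 5.0]
```

For parameters where lower is better, such as mass or price, the scaled values could be flipped with `low + high - value` so that 5 still means ‘best’.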

Once all the parameters have been scaled to the 1-5 range, the program asks the user for the relative importance of each parameter as follows:

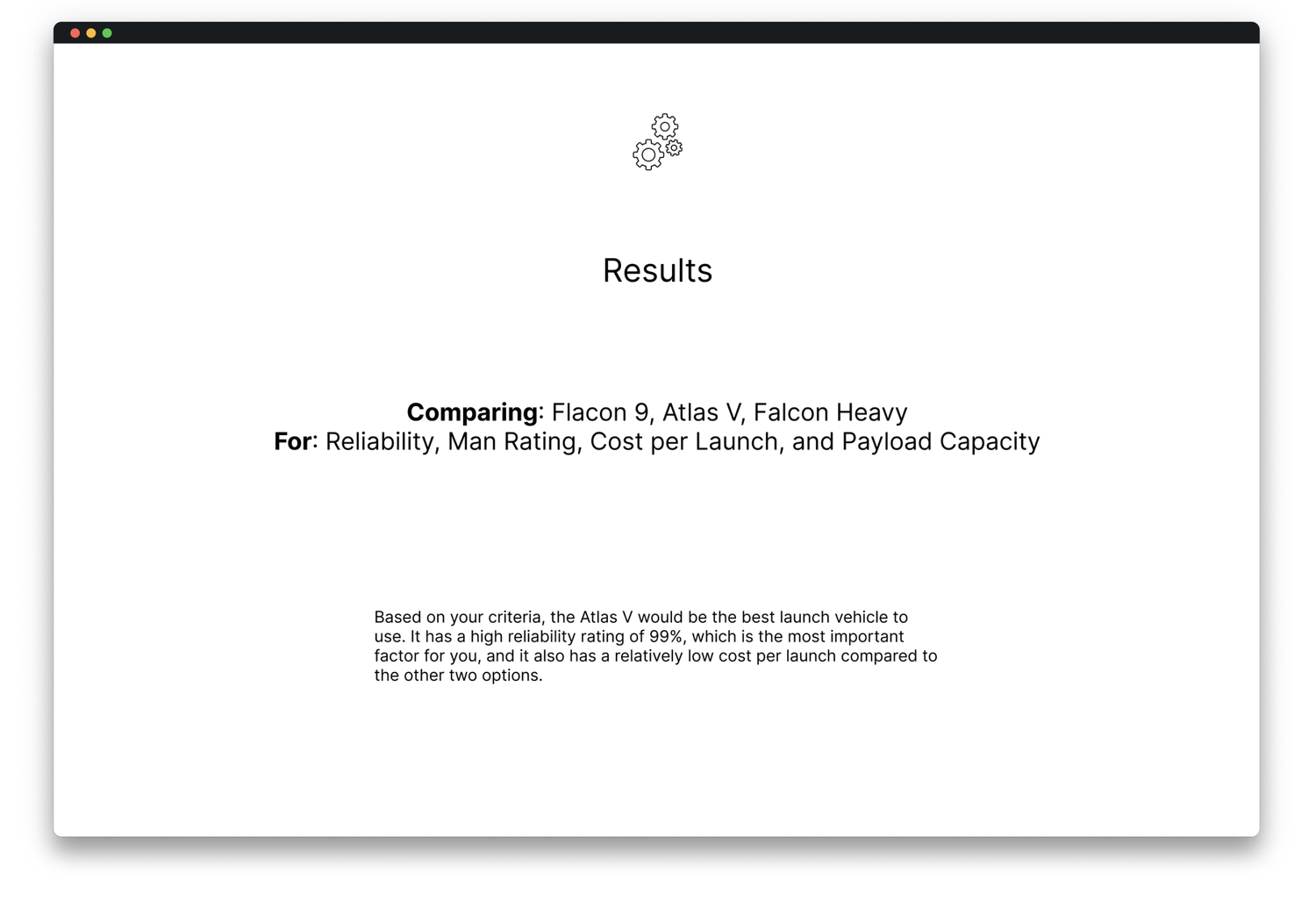

The algorithm processes the inputs and makes calls to the GPT-3 model that was fine-tuned in the earlier step, when the user entered their component name, and comes to a conclusion, which is summarized as follows:

Other comparisons

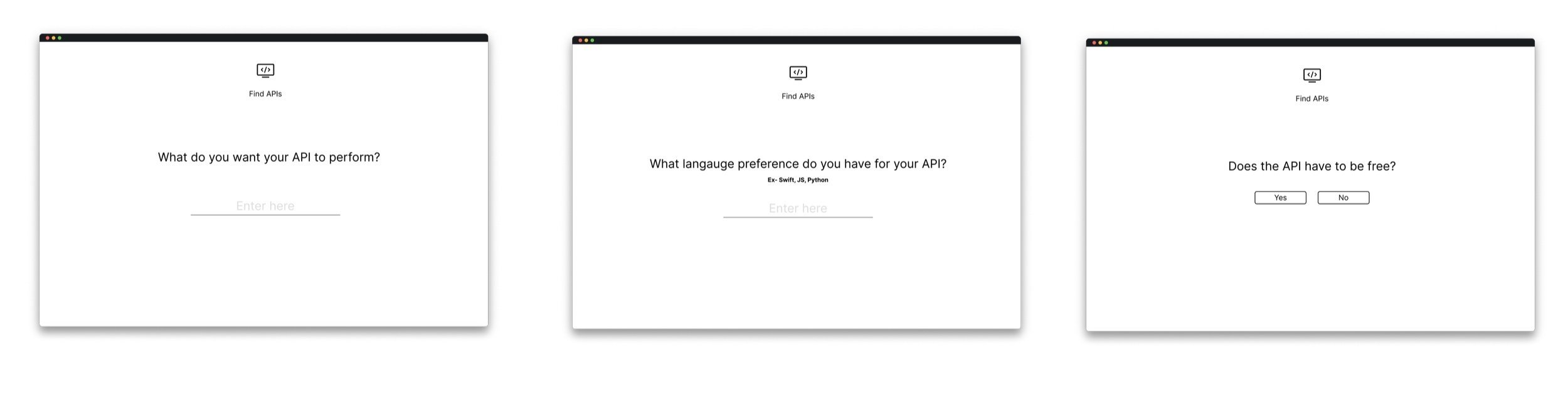

However, Blancus doesn’t just work in the field of hardware engineering. I wanted to streamline the process where software engineering students had to research the ideal API for their task in a certain framework for a certain language. I was able to use a slightly altered version of the algorithm to create a prototype that provides the user with APIs that achieve the task they wish to achieve.

A similar approach was taken to find the manufacturers for a given component and to compare two products, methodologies or tools. This doesn’t necessarily build the comparison rubric for the students, but it is a great starting point for a trade study. The students in my class found this incredibly helpful since it substantially reduced their research time. This reduction allowed more time to focus on the iterative design process and the creative human experience. The process of going to each individual component’s website, gathering data about its specifics and then comparing the components was automated by the program. Now, all the user has to do is compare components that are already much closer to the criteria they wish to achieve, rather than starting from scratch.

MVP Demo

Blancus is still a work in progress. To achieve viability, I’m currently:

Trying to leverage Machine Learning to make the response more tailored to the user.

I would achieve that with the help of a script that stores users’ previous searches and learns from them to come up with better component suggestions for their upcoming searches, while simultaneously fine-tuning the model.

Working on sourcing data directly from engineering databases like McMaster-Carr. Blancus can currently leverage what’s on the open web; however, to make the results more accurate, I’m trying to leverage public databases such as McMaster-Carr and NASA, as well as datasets put together by third parties on sources like Kaggle.